As artificial intelligence reshapes business, constructing an optimal AI solutions framework, or architecture, remains crucial for enterprises seeking to harness these innovations. The foundational pillars of a strong architecture are more relevant than ever, but their meaning and implementation have evolved dramatically with the advent of Generative AI. To build a future-proof AI ecosystem, leaders must reinterpret these key criteria for the modern era.

Below are the six key pillars, updated to reflect today's knowledge and best practices.

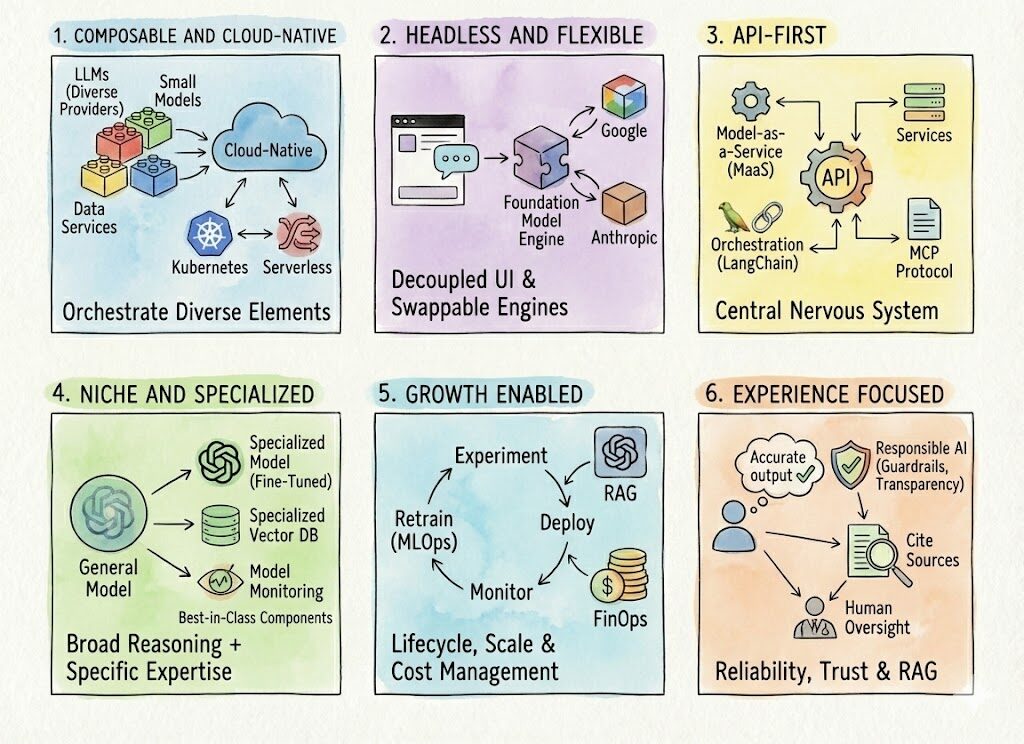

𝟭. 𝗖𝗼𝗺𝗽𝗼𝘀𝗮𝗯𝗹𝗲 𝗮𝗻𝗱 𝗖𝗹𝗼𝘂𝗱-𝗡𝗮𝘁𝗶𝘃𝗲

The vision of technology components as 𝘓𝘦𝘨𝘰 𝘣𝘭𝘰𝘤𝘬𝘴 is now a reality at a far grander scale. In today’s architecture, composability means orchestrating a diverse set of elements: large language models (LLMs) from different providers, specialized smaller models, vector databases for semantic search, and traditional data services. Cloud-native solutions are non-negotiable, providing the immense, on-demand GPU/TPU processing power required for model inference and fine-tuning. Modern AI stacks rely heavily on containerization with Kubernetes for managing complex deployments and serverless functions for scaling inference endpoints efficiently and cost-effectively.

𝟮. 𝗛𝗲𝗮𝗱𝗹𝗲𝘀𝘀 𝗮𝗻𝗱 𝗙𝗹𝗲𝘅𝗶𝗯𝗹𝗲

Decoupling the user interface from the underlying engine is now a critical strategy for navigating the rapid evolution of AI models. The 𝘵𝘦𝘤𝘩 𝘦𝘯𝘨𝘪𝘯𝘦 is frequently a foundation model, and a headless configuration grants businesses the agility to swap this engine out at will. For instance, a customer service chatbot’s front-end can remain constant while the backend is upgraded from one model generation to the next, or even switched between providers (e.g., from Google to Anthropic) to leverage better performance or pricing. This flexibility is essential for avoiding vendor lock-in and continuously optimizing the intelligence layer of your applications.
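A minimal sketch of this decoupling, assuming a hypothetical `ChatBackend` interface and two stand-in backends (`EchoBackendV1`, `EchoBackendV2`) that are not real provider SDKs. The front-end depends only on the interface, so the engine can be replaced without touching user-facing code:

```python
from dataclasses import dataclass
from typing import Protocol


class ChatBackend(Protocol):
    """Hypothetical interface: anything that turns a message into a reply."""

    def reply(self, message: str) -> str: ...


@dataclass
class EchoBackendV1:
    """Stand-in for one provider or model generation (illustrative only)."""

    def reply(self, message: str) -> str:
        return f"[v1] You said: {message}"


@dataclass
class EchoBackendV2:
    """Stand-in for a newer model or a different provider (illustrative only)."""

    def reply(self, message: str) -> str:
        return f"[v2] Here is a fuller answer to: {message}"


class ChatFrontend:
    """User-facing layer; it knows the interface, not the concrete engine."""

    def __init__(self, backend: ChatBackend) -> None:
        self._backend = backend

    def swap_backend(self, backend: ChatBackend) -> None:
        # Replace the engine at runtime; callers of handle() are unaffected.
        self._backend = backend

    def handle(self, message: str) -> str:
        return self._backend.reply(message.strip())
```

In a real system each backend class would wrap a provider's client library, and the choice of backend would typically come from configuration rather than code.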

𝟯. 𝗔𝗣𝗜-𝗙𝗶𝗿𝘀𝘁

APIs have solidified their role as the central nervous system of any AI ecosystem. The paradigm has matured into a “𝘔𝘰𝘥𝘦𝘭-𝘢𝘴-𝘢-𝘚𝘦𝘳𝘷𝘪𝘤𝘦” (MaaS) economy, in which most state-of-the-art AI capabilities are consumed via API calls. An API-first architecture enables complex AI workflows, with orchestration frameworks (like LangChain) chaining API calls to create intelligent agents. Looking forward, this “𝘈𝘗𝘐 𝘢𝘴 𝘢 𝘤𝘰𝘯𝘵𝘳𝘢𝘤𝘵” is evolving towards standardized protocols such as the Model Context Protocol (MCP). Such standards aim to give applications a common way to supply context, including retrieved data and access to external tools, to any compliant model, further enhancing interoperability.
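The idea of chaining API calls can be illustrated with a small sketch. This is not LangChain's API. The step functions (`retrieve_step`, `generate_step`) are hypothetical stand-ins for real service calls, and the shared `state` dictionary is an assumed convention for passing results from one step to the next:

```python
from typing import Callable, Dict, List

# Each step takes the current state and returns an updated copy.
Step = Callable[[Dict[str, str]], Dict[str, str]]


def retrieve_step(state: Dict[str, str]) -> Dict[str, str]:
    # Stand-in for a search or database API call (hypothetical).
    return {**state, "context": f"notes about {state['question']}"}


def generate_step(state: Dict[str, str]) -> Dict[str, str]:
    # Stand-in for a model API call that uses the retrieved context (hypothetical).
    return {**state, "answer": f"Based on {state['context']}: ..."}


def run_chain(steps: List[Step], state: Dict[str, str]) -> Dict[str, str]:
    # Run the steps in order, feeding each one the previous step's output.
    for step in steps:
        state = step(state)
    return state


result = run_chain([retrieve_step, generate_step], {"question": "refund policy"})
```

Because every step honors the same input and output contract, steps can be reordered, replaced, or pointed at a different provider without changing `run_chain`.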

𝟰. 𝗡𝗶𝗰𝗵𝗲 𝗮𝗻𝗱 𝗦𝗽𝗲𝗰𝗶𝗮𝗹𝗶𝘇𝗲𝗱

While massive, general-purpose foundation models seem like the ultimate jack-of-all-trades, the principle of specialization remains vital, albeit in a more nuanced way. The optimal strategy now involves using these large models for broad reasoning while applying specialization in two key areas:

Specialized Models: For high-frequency, domain-specific tasks, fine-tuning smaller models on your proprietary data often yields a more accurate, faster, and cost-effective solution.

Specialized Tooling: The AI stack itself is composed of best-in-class, specialized components, from a specialized vector database for search to a specialized platform for model monitoring. The generalist AI is powered by a team of specialists.
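One way to picture the split between generalist and specialist models is a simple router. The sketch below is illustrative only: `general_model`, `invoice_model`, and the `SPECIALISTS` table are hypothetical placeholders for real model endpoints.

```python
from typing import Callable, Dict


def general_model(prompt: str) -> str:
    # Stand-in for a large general-purpose model (hypothetical).
    return f"general:{prompt}"


def invoice_model(prompt: str) -> str:
    # Stand-in for a smaller model fine-tuned on invoice data (hypothetical).
    return f"invoice-specialist:{prompt}"


# Map high-frequency, domain-specific task types to their specialists.
SPECIALISTS: Dict[str, Callable[[str], str]] = {"invoice": invoice_model}


def route(task_type: str, prompt: str) -> str:
    # Use a specialist when one is registered; otherwise fall back to the generalist.
    handler = SPECIALISTS.get(task_type, general_model)
    return handler(prompt)
```

Here `route("invoice", ...)` reaches the specialist, while any unregistered task type falls through to the general model.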

𝟱. 𝗚𝗿𝗼𝘄𝘁𝗵 𝗘𝗻𝗮𝗯𝗹𝗲𝗱

A growth-enabled architecture has evolved from a high-level goal to a set of concrete technical requirements. First, it must support the complete AI lifecycle (MLOps/LLMOps), from experimentation to deployment, continuous monitoring for drift, and systematic retraining. Second, it must be engineered to scale enterprise-specific patterns like Retrieval-Augmented Generation (RAG). Finally, growth enablement now includes rigorous cost management (FinOps for AI), ensuring that as usage scales, the economic viability of the AI solutions is maintained.
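The cost-management requirement can be made concrete with a small usage tracker. This is a sketch under stated assumptions: the `CostTracker` class is hypothetical, pricing is assumed to be per 1,000 tokens, and the prices in the usage example are placeholders, not real provider rates.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class CostTracker:
    # Prices in USD per 1,000 tokens; supply your provider's actual rates.
    input_price_per_1k: float
    output_price_per_1k: float
    budget_usd: float
    spent_by_team: Dict[str, float] = field(default_factory=dict)

    def record(self, team: str, input_tokens: int, output_tokens: int) -> float:
        # Cost of one request, attributed to the team that made it.
        cost = (input_tokens / 1000) * self.input_price_per_1k
        cost += (output_tokens / 1000) * self.output_price_per_1k
        self.spent_by_team[team] = self.spent_by_team.get(team, 0.0) + cost
        return cost

    @property
    def total_spent(self) -> float:
        return sum(self.spent_by_team.values())

    def over_budget(self) -> bool:
        return self.total_spent > self.budget_usd


# Placeholder prices and budget, for illustration only.
tracker = CostTracker(input_price_per_1k=0.5, output_price_per_1k=1.5, budget_usd=10.0)
tracker.record("support", input_tokens=2000, output_tokens=1000)
```

Attributing spend per team, as `spent_by_team` does here, is one possible design; per-feature or per-customer attribution would follow the same pattern.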

𝟲. 𝗘𝘅𝗽𝗲𝗿𝗶𝗲𝗻𝗰𝗲 𝗙𝗼𝗰𝘂𝘀𝗲𝗱

Democratizing AI solutions is more critical than ever, but the definition of a good experience has expanded significantly. User-friendliness now centers on the quality and reliability of the AI’s output: its accuracy, coherence, and speed. Architecturally, this means implementing systems like RAG, which ground answers in retrieved documents to reduce factual errors (“hallucinations”). Furthermore, the user experience is now intrinsically linked to trust. An experience-centric architecture must therefore incorporate Responsible AI principles directly, featuring built-in guardrails, transparently citing sources for its claims, and providing clear pathways for human oversight.
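The combination of retrieval, source citation, and a human-oversight fallback can be sketched in a few lines. This is a toy illustration, not a production RAG system: the `DOCS` corpus is invented, retrieval uses simple word-overlap cosine similarity instead of a vector database, the `min_score` threshold is an arbitrary assumption, and the "answer" is just the best-matching passage where a real system would call a model.

```python
import re
from collections import Counter
from math import sqrt
from typing import Dict, List, Tuple

# Invented example corpus: file name -> passage.
DOCS: Dict[str, str] = {
    "returns.md": "Customers may return items within 30 days of delivery.",
    "shipping.md": "Standard shipping takes five business days.",
}


def _vec(text: str) -> Counter:
    # Bag-of-words vector; a real system would use learned embeddings.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: Dict[str, str], min_score: float = 0.2) -> List[Tuple[str, float]]:
    # Return (source, score) pairs above the threshold, best match first.
    q = _vec(query)
    scored = [(_cosine(q, _vec(text)), name) for name, text in docs.items()]
    return [(name, score) for score, name in sorted(scored, reverse=True) if score >= min_score]


def answer(query: str, docs: Dict[str, str]) -> dict:
    hits = retrieve(query, docs)
    if not hits:
        # Guardrail: with no supporting source, decline and escalate to a person.
        return {"answer": None, "sources": [], "needs_human": True}
    top_source = hits[0][0]
    # Stand-in for generation: return the passage itself, with its citations.
    return {"answer": docs[top_source], "sources": [n for n, _ in hits], "needs_human": False}
```

Asking about returns yields a passage with `returns.md` listed as its source, while an unrelated question produces no answer and sets `needs_human` so the request can be routed to a person.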